Add juan contribution


@@ -0,0 +1 @@
OPENAI_API_KEY=your_openai_api_key

@@ -0,0 +1 @@
3.12

week3/community-contributions/juan_synthetic_data/README.md

@@ -0,0 +1,254 @@
# Synthetic Data Generator

**NOTE:** This is a copy of the repository https://github.com/Jsrodrigue/synthetic-data-creator.

An intelligent synthetic data generator that uses OpenAI models to create realistic tabular datasets based on reference data. This project includes an intuitive web interface built with Gradio.

> **🎓 Educational Project**: This project was inspired by the highly regarded LLM Engineering course on Udemy: [LLM Engineering: Master AI and Large Language Models](https://www.udemy.com/course/llm-engineering-master-ai-and-large-language-models/learn/lecture/52941433#questions/23828099). It demonstrates practical applications of LLM engineering principles, prompt engineering, and synthetic data generation techniques.

## Key highlights

- Built with Python & Gradio
- Uses OpenAI GPT-4-family models (GPT-4o-mini, GPT-4.1-mini) for tabular data synthesis
- Focused on statistical consistency and controlled randomness
- Lightweight and easy to extend

|

||||

## 📸 Screenshots & Demo

|

||||

|

||||

### Application Interface

|

||||

<p align="center">

|

||||

<img src="screenshots/homepage.png" alt="Main Interface" width="70%">

|

||||

</p>

|

||||

<p align="center"><em>Main interface showing the synthetic data generator with all controls</em></p>

|

||||

|

||||

### Generated Data Preview

|

||||

<p align="center">

|

||||

<img src="screenshots/generated_table.png" alt="Generated table" width="70%">

|

||||

</p>

|

||||

<p align="center"><em> Generated CSV preview with the Wine dataset reference</em></p>

|

||||

|

||||

### Histogram plots

|

||||

<p align="center">

|

||||

<img src="screenshots/histogram.png" alt="Histogram plot" width="70%">

|

||||

</p>

|

||||

<p align="center"><em>Example of Histogram comparison plot in the Wine dataset</em></p>

|

||||

|

||||

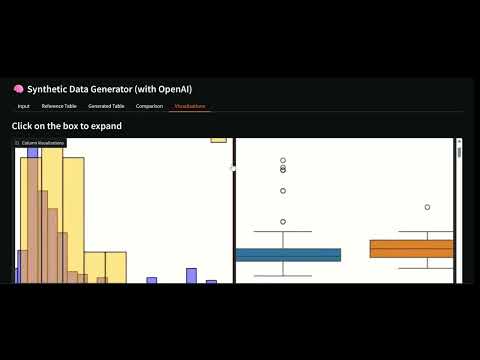

### Boxplots

|

||||

<p align="center">

|

||||

<img src="screenshots/boxplot.png" alt="Boxplot" width="70%">

|

||||

</p>

|

||||

<p align="center"><em>Example of Boxplot comparison</em></p>

|

||||

|

||||

|

||||

### Video Demo

|

||||

[](https://youtu.be/C7c8BbUGGBA)

|

||||

|

||||

*Click to watch a complete walkthrough of the application*

|

||||

|

||||

## 📋 Features

- **Intelligent Generation**: Generates synthetic data using OpenAI models (GPT-4o-mini, GPT-4.1-mini)
- **Web Interface**: Provides an intuitive Gradio UI with real-time data preview
- **Reference Data**: Optionally load a CSV file so the generated data follows its statistical distributions
- **Export Options**: Download generated datasets directly in CSV format
- **Included Examples**: Comes with ready-to-use sample datasets for people, sentiment analysis, and wine quality
- **Dynamic Batching**: Automatically adapts the batch size to the prompt length and the reference sample size
- **Reference Sampling**: Uses random subsets of the reference data to ensure variability and reduce API cost.
  The sample size (default `64`) can be changed in `src/constants.py` via `N_REFERENCE_ROWS`.
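`src/constants.py` and `src/data_generation.py` are not shown here, so the project's exact sampling and batching logic is not visible in this commit. The following is a minimal standard-library sketch of the two ideas. It assumes rows are held in a plain list and that token usage can be approximated as roughly four characters per token. `sample_reference_rows`, `plan_batches`, `token_budget`, and `tokens_per_row` are hypothetical names chosen for illustration, not the project's API.

```python
import random

# Documented default from src/constants.py (N_REFERENCE_ROWS), duplicated here for the sketch.
N_REFERENCE_ROWS = 64


def sample_reference_rows(rows, n=N_REFERENCE_ROWS, seed=None):
    """Return a random subset of at most n rows; all rows if there are n or fewer."""
    if len(rows) <= n:
        return list(rows)
    rng = random.Random(seed)
    return rng.sample(rows, n)


def plan_batches(total_rows, prompt_chars, reference_chars,
                 token_budget=4000, tokens_per_row=50, chars_per_token=4):
    """Split total_rows into batch sizes that fit a rough per-request token budget.

    Hypothetical heuristic: the longer the prompt plus reference sample,
    the fewer output rows are requested per call.
    """
    prompt_tokens = (prompt_chars + reference_chars) // chars_per_token
    # Always leave room for at least one row, even if the prompt is very long.
    available = max(token_budget - prompt_tokens, tokens_per_row)
    rows_per_batch = max(1, available // tokens_per_row)

    batches = []
    remaining = total_rows
    while remaining > 0:
        size = min(rows_per_batch, remaining)
        batches.append(size)
        remaining -= size
    return batches
```

A fixed `seed` makes the sample reproducible. Passing `seed=None` draws a new subset on every call, which provides the batch-to-batch variability described above.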

## 🚀 Installation

### Prerequisites

- Python 3.12+
- An OpenAI account with an API key

### Installation with pip

```bash
# From the project directory, create a virtual environment
python -m venv venv
source venv/bin/activate # On Windows: venv\Scripts\activate

# Install dependencies
pip install -r requirements.txt
```

### Installation with uv

```bash
# Clone the repository
git clone https://github.com/Jsrodrigue/synthetic-data-creator.git
cd synthetic-data-creator

# Install dependencies (uv creates the environment in .venv)
uv sync

# Activate the virtual environment
source .venv/bin/activate # On Windows: .venv\Scripts\activate
# Alternatively, skip activation and prefix commands with `uv run`, e.g. `uv run python app.py`
```

### Configuration

1. Copy the environment variables example file:

   ```bash
   cp .env_example .env
   ```

2. Edit `.env` and add your OpenAI API key:

   ```
   OPENAI_API_KEY=your_api_key_here
   ```
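`app.py` reads the key with `os.getenv("OPENAI_API_KEY")` after calling `load_dotenv()`. If `.env` is missing or still contains the placeholder, the problem only surfaces when the first API call fails. A fail-fast check along these lines could run at startup. `require_openai_key` is a hypothetical helper and is not part of the project.

```python
import os

# Placeholder value used in the README's .env example.
PLACEHOLDER = "your_api_key_here"


def require_openai_key(environ=os.environ):
    """Return OPENAI_API_KEY from the environment, or raise if it is missing or a placeholder."""
    key = environ.get("OPENAI_API_KEY", "").strip()
    if not key or key == PLACEHOLDER:
        raise RuntimeError(
            "OPENAI_API_KEY is not set: copy .env_example to .env and add your key"
        )
    return key
```

In `app.py`, this check would run immediately after `load_dotenv()`, for example as `openai.api_key = require_openai_key()`.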

## 🎯 Usage

### Start the application

```bash
python app.py
```

The script prints a local URL (e.g., http://localhost:7860). Open that link in your browser.

### How to use the interface

1. **Configure Prompts**:
   - **System Prompt**: Defaults to the rules defined in `src/constants.py`. You can edit it in the collapsible "System Prompt" box in the app, or change the default in `src/constants.py`.
   - **User Prompt**: Specifies what type of data to generate (default: 15 rows, defined in `src/constants.py`).

2. **Select Model**:
   - `gpt-4o-mini`: Faster and more economical
   - `gpt-4.1-mini`: Higher reasoning capacity

3. **Load Reference Data** (optional):
   - Upload a CSV file with similar data
   - Use one of the included examples: `people_reference.csv`, `sentiment_reference.csv`, or `wine_reference.csv`

4. **Generate Data**:
   - Click "🚀 Generate Data"
   - Review the results in the Gradio UI ("Generated Table", "Comparison", and "Visualizations" tabs)
   - Download the generated CSV

## 📊 Quality Evaluation

### Simple Evaluation System

The project includes a simple evaluation system focused on basic metrics and visualizations:

#### Features

- **Simple Metrics**: Basic statistical comparisons and quality checks
- **Integrated Visualizations**: Automatic generation of comparison plots in the app
- **Easy to Understand**: Clear scores and simple reports
- **Scale Invariant**: Works when the reference and generated datasets have different numbers of rows
- **Temporary Files**: Visualizations are generated in temp files and cleaned up automatically
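`src/evaluator.py` is not shown in this commit, so the exact metrics it computes are not visible here. The following standard-library sketch shows one way to implement basic per-column comparisons that still work when the two datasets differ in length. It is an illustration, not the project's code, and `ks_statistic` and `compare_numeric_column` are hypothetical names. The two-sample Kolmogorov-Smirnov statistic compares empirical CDFs. Each CDF is normalized by its own number of rows, so it does not depend on dataset size.

```python
import statistics
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # Both CDFs are step functions that jump only at sample points,
    # so checking every observed value is enough to find the maximum gap.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def compare_numeric_column(reference, generated):
    """Compare one numeric column of the reference and generated data."""
    ref_std = statistics.pstdev(reference)
    gen_std = statistics.pstdev(generated)
    return {
        "mean_diff": abs(statistics.mean(reference) - statistics.mean(generated)),
        "std_ratio": gen_std / ref_std if ref_std else float("nan"),
        "ks": ks_statistic(reference, generated),
    }
```

A KS value near 0 means the two columns have similar distributions, and a value of 1 means they do not overlap at all. A `std_ratio` near 1 means the generated column has about the same spread as the reference.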

## 🛠️ Improvements and Next Steps

### Immediate Improvements

1. **Advanced Validation**:
   - Implement specific validators by data type
   - Create evaluation reports

2. **Advanced Quality Metrics**:
   - Include more advanced metrics to compare multivariate similarity (future work), e.g.:
     - C2ST (Classifier Two-Sample Test): train a classifier to distinguish real from synthetic rows and report its AUROC (ideal ≈ 0.5).
     - MMD (Maximum Mean Discrepancy): kernel-based multivariate distance.
     - Multivariate Wasserstein / Optimal Transport: joint-distribution distance (e.g., using the POT, Python Optimal Transport, library).

3. **More Models**:
   - Integrate Hugging Face models
   - Support for local models (Ollama)
   - Comparison between different models
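MMD is listed as future work, so nothing in the repository implements it yet. The following minimal pure-Python sketch shows the standard biased estimator of squared MMD with a Gaussian (RBF) kernel: MMD² = mean k(x, x') + mean k(y, y') - 2 · mean k(x, y). It treats rows as equal-length numeric tuples. `gamma` is an assumed bandwidth parameter, and the function names are hypothetical.

```python
import math


def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel exp(-gamma * ||x - y||^2) for two equal-length vectors."""
    return math.exp(-gamma * sum((xi - yi) ** 2 for xi, yi in zip(x, y)))


def mmd2_biased(X, Y, gamma=1.0):
    """Biased estimate of the squared MMD between row samples X and Y."""
    k_xx = sum(rbf_kernel(a, b, gamma) for a in X for b in X) / len(X) ** 2
    k_yy = sum(rbf_kernel(a, b, gamma) for a in Y for b in Y) / len(Y) ** 2
    k_xy = sum(rbf_kernel(a, b, gamma) for a in X for b in Y) / (len(X) * len(Y))
    return k_xx + k_yy - 2 * k_xy
```

The RBF kernel uses Euclidean distance, so columns with large ranges (for example `total sulfur dioxide` in the Wine dataset) would dominate unless each column is standardized first. The estimator also scales as O(n²) in the number of rows, so a subsample or a vectorized implementation would be needed for larger datasets.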

### Advanced Features

1. **Conditional Generation**:
   - Data based on specific conditions
   - Controlled outlier generation
   - Maintaining complex relationships

2. **Privacy Analysis**:
   - Differential privacy metrics
   - Sensitive data detection
   - Automatic anonymization

3. **Database Integration**:
   - Direct database connection
   - Large-scale data generation
   - Automatic synchronization

### Scalable Architecture

1. **REST API**:
   - Endpoints for integration
   - Authentication and rate limiting
   - OpenAPI documentation

2. **Asynchronous Processing**:
   - Work queues for long generations
   - Progress notifications
   - Robust error handling

3. **Monitoring and Logging**:
   - Usage and performance metrics
   - Detailed generation logs
   - Quality alerts

## 📁 Project Structure

```
synthetic_data/
├── app.py                  # Main Gradio application for synthetic data generation
├── README.md               # Project documentation
├── pyproject.toml          # Project configuration
├── requirements.txt        # Python dependencies
├── data/                   # Reference CSV datasets used for generating synthetic data
│   ├── people_reference.csv
│   ├── sentiment_reference.csv
│   └── wine_reference.csv
├── notebooks/              # Jupyter notebooks for experiments and development
│   └── notebook.ipynb
├── src/                    # Python source code
│   ├── __init__.py
│   ├── constants.py        # Default constants, reference sample size, and default prompts
│   ├── data_generation.py  # Core functions for batch generation and evaluation
│   ├── evaluator.py        # Evaluation logic and metrics
│   ├── IO_utils.py         # Utilities for file management and temp directories
│   ├── openai_utils.py     # Wrappers for OpenAI API calls
│   └── plot_utils.py       # Functions to create visualizations from data
└── temp_plots/             # Temporary folder for generated plot images (auto-cleaned)
```

## 📄 License

This project is licensed under the MIT License. See the `LICENSE` file for more details.

## 🎓 Course Context & Learning Outcomes

This project was developed as part of the [LLM Engineering: Master AI and Large Language Models](https://www.udemy.com/course/llm-engineering-master-ai-and-large-language-models/learn/lecture/52941433#questions/23828099) course on Udemy. It puts the following course concepts into practice.

### Key Learning Objectives:

- **Prompt Engineering Mastery**: Creating effective system and user prompts for consistent outputs
- **API Integration**: Working with OpenAI's API for production applications
- **Data Processing**: Handling JSON parsing, validation, and error management
- **Web Application Development**: Building user interfaces with Gradio
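This commit does not show how `src/data_generation.py` or `src/openai_utils.py` parse the model's output. Assuming the model is asked to return rows as a JSON array of objects (an assumption the source does not confirm), a minimal validation step could look like this. `parse_rows` is a hypothetical name.

```python
import json

FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in


def parse_rows(raw_text, expected_columns):
    """Parse a model reply into a list of row dicts and check every row's columns."""
    text = raw_text.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (e.g. a json-tagged fence) and anything after the closing fence.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Model output is not valid JSON: {exc}") from exc
    if not isinstance(data, list) or not all(isinstance(row, dict) for row in data):
        raise ValueError("Expected a JSON array of objects")
    expected = set(expected_columns)
    for i, row in enumerate(data):
        if set(row) != expected:
            raise ValueError(f"Row {i} has columns {sorted(row)}, expected {sorted(expected)}")
    return data
```

Raising `ValueError` on every failure gives the caller a single error type to catch, for example to retry the batch or to show a message in the UI.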

### Course Insights Applied:

- **Why OpenAI over Open Source**: This project uses OpenAI models instead of open-source models because models such as Llama 3.2 followed the prompt inconsistently. For this specific task, OpenAI provides more reliable and faster results.
- **Production Considerations**: Focus on error handling, output validation, and user experience
- **Scalability Planning**: Architecture designed for future enhancements and integrations

### Related Course Topics:

- Prompt engineering techniques
- LLM API integration and optimization
- Selecting the best model for each use case

---

**📚 Course Link**: [LLM Engineering: Master AI and Large Language Models](https://www.udemy.com/course/llm-engineering-master-ai-and-large-language-models/learn/lecture/52941433#questions/23828099)

week3/community-contributions/juan_synthetic_data/app.py

@@ -0,0 +1,131 @@
import gradio as gr
import os
import atexit
from src.IO_utils import cleanup_temp_files
from src.data_generation import generate_and_evaluate_data
from src.plot_utils import display_reference_csv
from dotenv import load_dotenv
import openai
from src.constants import PROJECT_TEMP_DIR, SYSTEM_PROMPT, USER_PROMPT

# ==========================================================
# Setup
# ==========================================================

#Load the api key
load_dotenv()
openai.api_key = os.getenv("OPENAI_API_KEY")

# Temporary folder for images
os.makedirs(PROJECT_TEMP_DIR, exist_ok=True)

# Ensure temporary plot images are deleted when the program exits
atexit.register(lambda: cleanup_temp_files(PROJECT_TEMP_DIR))

# ==========================================================
# Gradio App
# ==========================================================
with gr.Blocks() as demo:

    # Store temp folder in state
    temp_dir_state = gr.State(value=PROJECT_TEMP_DIR)

    gr.Markdown("# 🧠 Synthetic Data Generator (with OpenAI)")

    # ======================================================
    # Tabs for organized sections
    # ======================================================
    with gr.Tabs():

        # ------------------------------
        # Tab 1: Input
        # ------------------------------
        with gr.Tab("Input"):

            # System prompt in collapsible
            with gr.Accordion("System Prompt (click to expand)", open=False):
                system_prompt_input = gr.Textbox(
                    label="System Prompt",
                    value=SYSTEM_PROMPT,
                    lines=20
                )

            # User prompt box
            user_prompt_input = gr.Textbox(label="User Prompt", value=USER_PROMPT, lines=5)

            # Model selection
            model_select = gr.Dropdown(
                label="OpenAI Model",
                choices=["gpt-4o-mini", "gpt-4.1-mini"],
                value="gpt-4o-mini"
            )

            # Reference CSV upload
            reference_input = gr.File(label="Reference CSV (optional)", file_types=[".csv"])

            # Examples
            gr.Examples(
                examples=["data/sentiment_reference.csv","data/people_reference.csv","data/wine_reference.csv"],
                inputs=reference_input
            )

            # Generate button
            generate_btn = gr.Button("🚀 Generate Data")

            # Download button
            download_csv = gr.File(label="Download CSV")

        # ------------------------------
        # Tab 2: Reference Table
        # ------------------------------
        with gr.Tab("Reference Table"):
            reference_display = gr.DataFrame(label="Reference CSV Preview")

        # ------------------------------
        # Tab 3: Generated Table
        # ------------------------------
        with gr.Tab("Generated Table"):
            output_df = gr.DataFrame(label="Generated Data")


        # ------------------------------
        # Tab 4: Evaluation
        # ------------------------------
        with gr.Tab("Comparison"):
            with gr.Accordion("Evaluation Results (click to expand)", open=True):
                evaluation_df = gr.DataFrame(label="Evaluation Results")

        # ------------------------------
        # Tab 5: Visualizations
        # ------------------------------

        with gr.Tab("Visualizations"):
            gr.Markdown("# Click on the box to expand")

            images_gallery = gr.Gallery(
                label="Column Visualizations",
                show_label=True,
                columns=2,
                height='auto',
                interactive=True
            )

    # Hidden state for internal use
    generated_state = gr.State()

    # ======================================================
    # Event bindings
    # ======================================================
    generate_btn.click(
        fn=generate_and_evaluate_data,
        inputs=[system_prompt_input, user_prompt_input, temp_dir_state, reference_input, model_select],
        outputs=[output_df, download_csv, evaluation_df, generated_state, images_gallery]
    )

    reference_input.change(
        fn=display_reference_csv,
        inputs=[reference_input],
        outputs=[reference_display]
    )

demo.launch(debug=True)

@@ -0,0 +1,16 @@
Name,Age,City
John,32,New York
Alice,45,Los Angeles
Bob,28,Chicago
Eve,35,Houston
Mike,52,Philadelphia
Emma,29,San Antonio
Oliver,39,Phoenix
Isabella,48,San Diego
William,55,Dallas
Charlotte,31,San Jose
Alexander,42,San Francisco
Harper,38,San Antonio
Julia,46,San Diego
Ethan,53,San Jose
Ava,29,San Francisco

@@ -0,0 +1,99 @@
,Comment,sentiment
0,"Them: I don't think I like this game.

Me: But you haven't even played it for 5 minutes and are still in the tutorial.",negative
1,Then you leave them to farm the smaller creatures while you either wait or help them kill them all with the click of a button.,negative
2,Nothing beats the feeling you get when you see them fall in love with it just like you did all those years ago,positive
3,"[Also, they're made of paper](https://i.imgur.com/wYu0G9J.jpg)

Edit: I tried to make a gif and failed so here's a [video](https://i.imgur.com/aPzS8Ny.mp4)",negative
4,"Haha... That was exactly it when my brother tried to get me into WoW.

Him, "" I can run you through raids to get you to level up faster and get better gear. But first you need to be this min level. What are you""

Me ""lvl 1"".

Him ""ok. Let's do a couple quests to get you up. What is your quest""

Me ""collect 20 apples"".",positive
5,I'm going through this right now. I just started playing minecraft for the first time and my SO is having to walk me through everything.,positive
6,Then they get even more into it than you and end up getting all the loot and items you wanted before you. They make you look like the noob in about 3 months.,positive
7,"###Take your time, you got this
|#|user|EDIT|comment|Link
|:--|:--|:--|:--|:--|
|0|/u/KiwiChoppa147|[EDIT](https://i.imgur.com/OI8jNtE.png)|Then you leave them to farm the smaller creatures while you either wait or help them kill them all with the click of a button.|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etor3t2/)|
|1|/u/League0fGaming|[EDIT](https://i.imgur.com/5uvRAYy.png)|Nothing beats the feeling you get when you see them fall in love with it just like you did all those years ago|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etor371/)|
|2|/u/DeJMan|[EDIT](https://i.imgur.com/3FL3IFb.png)|[Also, they're made of paper](https://i.imgur.com/wYu0G9J.jpg) Edit: I tried to make a gif and failed so here's a [video](https://i.imgur.com/aPzS8Ny.mp4)|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etos1ic/)|
|3|/u/Bamboo6|[EDIT](https://i.imgur.com/SiDFZxQ.png)|Haha... That was exactly it when my brother tried to get me into WoW. Him, "" I can run you through raids to get you to level up faster and get better gear. But first you need to be this min level. What are you"" Me ""lvl 1"". Him ""ok. Let's do a couple quests to get you up. What is your quest"" Me ""collect 20 apples"".|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etorb6s/)|
|4|/u/xxfisharemykidsxx|[EDIT](https://i.imgur.com/3ek9F93.png)|I'm going through this right now. I just started playing minecraft for the first time and my SO is having to walk me through everything.|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etor7hk/)|
|5|/u/DuckSeeDuckWorld|[EDIT](https://i.imgur.com/rlE6VFP.png)|[This is my last EDIT before I go to camp for a week](https://imgur.com/xoOWF6K)|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etorpvh/)|
|6|/u/ChecksUsernames|[EDIT](https://i.imgur.com/6Wc56ec.png)|What the hell you have your own edit bot?!|[Link](/r/gaming/comments/ccr8c8/take_your_time_you_got_this/etotc4w/)|


I am a little fan-made bot who loves /u/SrGrafo but is a little lazy with hunting for EDITs. If you want to support our great creator, check out his [Patreon](https://Patreon.com/SrGrafo)",positive
8,"Them: ""Wait, where did you go?""

Me --cleaning up the vast quantities of mobs they've managed to stumble past: "" Oh just, you know, letting you get a feel for navigation.""",neutral
9,"Don't mind the arrows, everything's fine",positive
10,[me_irl](https://i.imgur.com/eRPb2X3.png),neutral
11,"I usually teach them the basic controls, and then throw them to the wolves like Spartans. Its sink or swim now!",positive
12,This is Warframe in a nutshell,neutral
13,[I love guiding people trough the game for the First time](https://imgur.com/uep20iB),positive
14,[showing a video game to my nephew for the first time didn't go that well :D](https://i.imgur.com/dQf4mfI.png),negative
15,[When it's a puzzle game](https://i.imgur.com/BgLqzRa.png),neutral
16,"I love SrGrafo’s cheeky smiles in his drawings.

Also, I wonder if it’s Senior Grafo, Señor Grafo, or Sir Grafo.",positive
17,"https://i.redd.it/pqjza65wrd711.jpg

Same look.",neutral
18,[This is my last EDIT before I go to camp for a week](https://imgur.com/xoOWF6K),neutral
19,Haha this is me in Warframe but I've only been playing for a year. It's so easy to find beginners and they always need help with something.,positive
20,This happens all the time on r/warframe ! Helping new people is like a whole part of the game's fun.,positive
21,[deleted],neutral
22,"Once day when I have kids, I hope I can do the same with them",positive
23,WAIT NO. WHY'D YOU PRESS X INSTEAD? Now you just used the only consumable for the next like 3 stages. Here lemme just restart from your last save...,neutral
24,Big gamer energy.,positive
25,"What about ten minutes in and they say “I’m not sure I get what’s going on. Eh I’m bored.”

Shitty phone [EDIT](https://imgur.com/a/zr4Ahnp)",negative
26,Press *alt+f4* for the special move,positive
27,"I remember teaching my little brother everything about Minecraft. Ah, good times. Now he's a little prick xD",positive
28,2nd top post of 2019!! \(^0^)/,positive
29,"With Grafo’s most recent comics, this achievement means so much more now. Check them out on his profile, u/SrGrafo, they’re titled “SrGrafo’s inception “",neutral
30,"this is my bf showing me wow.

Him: “You can’t just stand there and take damage.”
Me: “but I can’t move fast and my spells get cancelled.”

*proceeds to die 5 times in a row.*

and then he finishes it for me after watching me fail.

Me: yay. 😀😀",neutral
31,"Quick cross over

https://imgur.com/a/9y4JVAr",neutral
32,"Man, I really enjoy encoutering nice Veterans in online games",positive
33,Wow. This is my first time here before the edits.,positive
34,So this is the most liked Reddit post hmm,positive
35,Diamond armor? Really?,positive
36,"I remember when I was playing Destiny and I was pretty low level, having fun going through the missions, then my super high level friend joined. It was really unfun because he was slaughtering everything for me while I sat at the back doing jackshit",positive
37,"""I'll just use this character until you get the hang of things and then swap to an alt so we can level together""",neutral
38,"My girlfriend often just doesn't get why I love the games I play, but that's fine. I made sure to sit and watch her while she fell in love with breath of the wild.",negative
39,"Warframe was full of people like this last i was on and its amazing. I was one of them too, but mostly for advice more than items because i was broke constantly.",neutral
40,This is the most upvoted post I've seen on Reddit. And it was unexpectedly touching :),positive
41,220k. holy moly,neutral
42,Last,neutral
43,"170k+ upvotes in 11 hours.
Is this a record?",neutral
44,This is the top post of all time😱,positive
45,"Congratulations, 2nd post of the Year",positive
46,Most liked post on reddit,positive
47,Absolute Unit,neutral
48,"I did similar things in Monster Hunter World.
The only problem is they would never play ever again and play other games like Fortnite...feels bad man.
If you ever get interested on playing the game u/SrGrafo then I’ll teach you the ways of the hunter!!! (For real tho it’s a really good game and better with buddy’s!)",positive
49,Congrats on the second most upvoted post of 2019 my guy.,positive
50,"This was it with my brother when I first started playing POE. He made it soooo much easier to get into the game. To understand the gameplay and mechanics. I think I’d have left in a day or two had it not been for him
And walking me through the first few missions lmao. u/sulphra_",positive

@@ -0,0 +1,159 @@
fixed acidity,volatile acidity,citric acid,residual sugar,chlorides,free sulfur dioxide,total sulfur dioxide,density,pH,sulphates,alcohol,quality,Id
7.4,0.7,0.0,1.9,0.076,11.0,34.0,0.9978,3.51,0.56,9.4,5,0
7.8,0.88,0.0,2.6,0.098,25.0,67.0,0.9968,3.2,0.68,9.8,5,1
7.8,0.76,0.04,2.3,0.092,15.0,54.0,0.997,3.26,0.65,9.8,5,2
11.2,0.28,0.56,1.9,0.075,17.0,60.0,0.998,3.16,0.58,9.8,6,3
7.4,0.7,0.0,1.9,0.076,11.0,34.0,0.9978,3.51,0.56,9.4,5,4
7.4,0.66,0.0,1.8,0.075,13.0,40.0,0.9978,3.51,0.56,9.4,5,5
7.9,0.6,0.06,1.6,0.069,15.0,59.0,0.9964,3.3,0.46,9.4,5,6
7.3,0.65,0.0,1.2,0.065,15.0,21.0,0.9946,3.39,0.47,10.0,7,7
7.8,0.58,0.02,2.0,0.073,9.0,18.0,0.9968,3.36,0.57,9.5,7,8
6.7,0.58,0.08,1.8,0.09699999999999999,15.0,65.0,0.9959,3.28,0.54,9.2,5,10
5.6,0.615,0.0,1.6,0.08900000000000001,16.0,59.0,0.9943,3.58,0.52,9.9,5,12
7.8,0.61,0.29,1.6,0.114,9.0,29.0,0.9974,3.26,1.56,9.1,5,13
8.5,0.28,0.56,1.8,0.092,35.0,103.0,0.9969,3.3,0.75,10.5,7,16
7.9,0.32,0.51,1.8,0.341,17.0,56.0,0.9969,3.04,1.08,9.2,6,19
7.6,0.39,0.31,2.3,0.08199999999999999,23.0,71.0,0.9982,3.52,0.65,9.7,5,21
7.9,0.43,0.21,1.6,0.106,10.0,37.0,0.9966,3.17,0.91,9.5,5,22
8.5,0.49,0.11,2.3,0.084,9.0,67.0,0.9968,3.17,0.53,9.4,5,23
6.9,0.4,0.14,2.4,0.085,21.0,40.0,0.9968,3.43,0.63,9.7,6,24
6.3,0.39,0.16,1.4,0.08,11.0,23.0,0.9955,3.34,0.56,9.3,5,25
7.6,0.41,0.24,1.8,0.08,4.0,11.0,0.9962,3.28,0.59,9.5,5,26
7.1,0.71,0.0,1.9,0.08,14.0,35.0,0.9972,3.47,0.55,9.4,5,28
7.8,0.645,0.0,2.0,0.08199999999999999,8.0,16.0,0.9964,3.38,0.59,9.8,6,29
6.7,0.675,0.07,2.4,0.08900000000000001,17.0,82.0,0.9958,3.35,0.54,10.1,5,30
8.3,0.655,0.12,2.3,0.083,15.0,113.0,0.9966,3.17,0.66,9.8,5,32
5.2,0.32,0.25,1.8,0.10300000000000001,13.0,50.0,0.9957,3.38,0.55,9.2,5,34
7.8,0.645,0.0,5.5,0.086,5.0,18.0,0.9986,3.4,0.55,9.6,6,35
7.8,0.6,0.14,2.4,0.086,3.0,15.0,0.9975,3.42,0.6,10.8,6,36
8.1,0.38,0.28,2.1,0.066,13.0,30.0,0.9968,3.23,0.73,9.7,7,37
7.3,0.45,0.36,5.9,0.07400000000000001,12.0,87.0,0.9978,3.33,0.83,10.5,5,40
8.8,0.61,0.3,2.8,0.08800000000000001,17.0,46.0,0.9976,3.26,0.51,9.3,4,41
7.5,0.49,0.2,2.6,0.332,8.0,14.0,0.9968,3.21,0.9,10.5,6,42
8.1,0.66,0.22,2.2,0.069,9.0,23.0,0.9968,3.3,1.2,10.3,5,43
4.6,0.52,0.15,2.1,0.054000000000000006,8.0,65.0,0.9934,3.9,0.56,13.1,4,45
7.7,0.935,0.43,2.2,0.114,22.0,114.0,0.997,3.25,0.73,9.2,5,46
8.8,0.66,0.26,1.7,0.07400000000000001,4.0,23.0,0.9971,3.15,0.74,9.2,5,50
6.6,0.52,0.04,2.2,0.069,8.0,15.0,0.9956,3.4,0.63,9.4,6,51
6.6,0.5,0.04,2.1,0.068,6.0,14.0,0.9955,3.39,0.64,9.4,6,52
8.6,0.38,0.36,3.0,0.081,30.0,119.0,0.997,3.2,0.56,9.4,5,53
7.6,0.51,0.15,2.8,0.11,33.0,73.0,0.9955,3.17,0.63,10.2,6,54
10.2,0.42,0.57,3.4,0.07,4.0,10.0,0.9971,3.04,0.63,9.6,5,56
7.8,0.59,0.18,2.3,0.076,17.0,54.0,0.9975,3.43,0.59,10.0,5,58
7.3,0.39,0.31,2.4,0.07400000000000001,9.0,46.0,0.9962,3.41,0.54,9.4,6,59
8.8,0.4,0.4,2.2,0.079,19.0,52.0,0.998,3.44,0.64,9.2,5,60
7.7,0.69,0.49,1.8,0.115,20.0,112.0,0.9968,3.21,0.71,9.3,5,61
7.0,0.735,0.05,2.0,0.081,13.0,54.0,0.9966,3.39,0.57,9.8,5,63
7.2,0.725,0.05,4.65,0.086,4.0,11.0,0.9962,3.41,0.39,10.9,5,64
7.2,0.725,0.05,4.65,0.086,4.0,11.0,0.9962,3.41,0.39,10.9,5,65
6.6,0.705,0.07,1.6,0.076,6.0,15.0,0.9962,3.44,0.58,10.7,5,67
8.0,0.705,0.05,1.9,0.07400000000000001,8.0,19.0,0.9962,3.34,0.95,10.5,6,69
7.7,0.69,0.22,1.9,0.084,18.0,94.0,0.9961,3.31,0.48,9.5,5,72
8.3,0.675,0.26,2.1,0.084,11.0,43.0,0.9976,3.31,0.53,9.2,4,73
8.8,0.41,0.64,2.2,0.09300000000000001,9.0,42.0,0.9986,3.54,0.66,10.5,5,76
6.8,0.785,0.0,2.4,0.10400000000000001,14.0,30.0,0.9966,3.52,0.55,10.7,6,77
6.7,0.75,0.12,2.0,0.086,12.0,80.0,0.9958,3.38,0.52,10.1,5,78
8.3,0.625,0.2,1.5,0.08,27.0,119.0,0.9972,3.16,1.12,9.1,4,79
6.2,0.45,0.2,1.6,0.069,3.0,15.0,0.9958,3.41,0.56,9.2,5,80
7.4,0.5,0.47,2.0,0.086,21.0,73.0,0.997,3.36,0.57,9.1,5,82
6.3,0.3,0.48,1.8,0.069,18.0,61.0,0.9959,3.44,0.78,10.3,6,84
6.9,0.55,0.15,2.2,0.076,19.0,40.0,0.9961,3.41,0.59,10.1,5,85
8.6,0.49,0.28,1.9,0.11,20.0,136.0,0.9972,2.93,1.95,9.9,6,86
7.7,0.49,0.26,1.9,0.062,9.0,31.0,0.9966,3.39,0.64,9.6,5,87
9.3,0.39,0.44,2.1,0.107,34.0,125.0,0.9978,3.14,1.22,9.5,5,88
7.0,0.62,0.08,1.8,0.076,8.0,24.0,0.9978,3.48,0.53,9.0,5,89
7.9,0.52,0.26,1.9,0.079,42.0,140.0,0.9964,3.23,0.54,9.5,5,90
8.6,0.49,0.28,1.9,0.11,20.0,136.0,0.9972,2.93,1.95,9.9,6,91
7.7,0.49,0.26,1.9,0.062,9.0,31.0,0.9966,3.39,0.64,9.6,5,93
5.0,1.02,0.04,1.4,0.045,41.0,85.0,0.9938,3.75,0.48,10.5,4,94
6.8,0.775,0.0,3.0,0.102,8.0,23.0,0.9965,3.45,0.56,10.7,5,96
7.6,0.9,0.06,2.5,0.079,5.0,10.0,0.9967,3.39,0.56,9.8,5,98
8.1,0.545,0.18,1.9,0.08,13.0,35.0,0.9972,3.3,0.59,9.0,6,99
8.3,0.61,0.3,2.1,0.084,11.0,50.0,0.9972,3.4,0.61,10.2,6,100
8.1,0.545,0.18,1.9,0.08,13.0,35.0,0.9972,3.3,0.59,9.0,6,102
8.1,0.575,0.22,2.1,0.077,12.0,65.0,0.9967,3.29,0.51,9.2,5,103
7.2,0.49,0.24,2.2,0.07,5.0,36.0,0.996,3.33,0.48,9.4,5,104
8.1,0.575,0.22,2.1,0.077,12.0,65.0,0.9967,3.29,0.51,9.2,5,105
7.8,0.41,0.68,1.7,0.467,18.0,69.0,0.9973,3.08,1.31,9.3,5,106
6.2,0.63,0.31,1.7,0.08800000000000001,15.0,64.0,0.9969,3.46,0.79,9.3,5,107
7.8,0.56,0.19,1.8,0.10400000000000001,12.0,47.0,0.9964,3.19,0.93,9.5,5,110
8.4,0.62,0.09,2.2,0.084,11.0,108.0,0.9964,3.15,0.66,9.8,5,111
10.1,0.31,0.44,2.3,0.08,22.0,46.0,0.9988,3.32,0.67,9.7,6,113
7.8,0.56,0.19,1.8,0.10400000000000001,12.0,47.0,0.9964,3.19,0.93,9.5,5,114
9.4,0.4,0.31,2.2,0.09,13.0,62.0,0.9966,3.07,0.63,10.5,6,115
8.3,0.54,0.28,1.9,0.077,11.0,40.0,0.9978,3.39,0.61,10.0,6,116
7.3,1.07,0.09,1.7,0.17800000000000002,10.0,89.0,0.9962,3.3,0.57,9.0,5,120
8.8,0.55,0.04,2.2,0.11900000000000001,14.0,56.0,0.9962,3.21,0.6,10.9,6,121
7.3,0.695,0.0,2.5,0.075,3.0,13.0,0.998,3.49,0.52,9.2,5,122
7.8,0.5,0.17,1.6,0.08199999999999999,21.0,102.0,0.996,3.39,0.48,9.5,5,124
8.2,1.33,0.0,1.7,0.081,3.0,12.0,0.9964,3.53,0.49,10.9,5,126
8.1,1.33,0.0,1.8,0.08199999999999999,3.0,12.0,0.9964,3.54,0.48,10.9,5,127
8.0,0.59,0.16,1.8,0.065,3.0,16.0,0.9962,3.42,0.92,10.5,7,128
8.0,0.745,0.56,2.0,0.11800000000000001,30.0,134.0,0.9968,3.24,0.66,9.4,5,130
5.6,0.5,0.09,2.3,0.049,17.0,99.0,0.9937,3.63,0.63,13.0,5,131
7.9,1.04,0.05,2.2,0.084,13.0,29.0,0.9959,3.22,0.55,9.9,6,134
8.4,0.745,0.11,1.9,0.09,16.0,63.0,0.9965,3.19,0.82,9.6,5,135
7.2,0.415,0.36,2.0,0.081,13.0,45.0,0.9972,3.48,0.64,9.2,5,137
8.4,0.745,0.11,1.9,0.09,16.0,63.0,0.9965,3.19,0.82,9.6,5,140
5.2,0.34,0.0,1.8,0.05,27.0,63.0,0.9916,3.68,0.79,14.0,6,142
6.3,0.39,0.08,1.7,0.066,3.0,20.0,0.9954,3.34,0.58,9.4,5,143
5.2,0.34,0.0,1.8,0.05,27.0,63.0,0.9916,3.68,0.79,14.0,6,144
8.1,0.67,0.55,1.8,0.11699999999999999,32.0,141.0,0.9968,3.17,0.62,9.4,5,145
5.8,0.68,0.02,1.8,0.087,21.0,94.0,0.9944,3.54,0.52,10.0,5,146
6.9,0.49,0.1,2.3,0.07400000000000001,12.0,30.0,0.9959,3.42,0.58,10.2,6,148
7.3,0.33,0.47,2.1,0.077,5.0,11.0,0.9958,3.33,0.53,10.3,6,150
9.2,0.52,1.0,3.4,0.61,32.0,69.0,0.9996,2.74,2.0,9.4,4,151
7.5,0.6,0.03,1.8,0.095,25.0,99.0,0.995,3.35,0.54,10.1,5,152
7.5,0.6,0.03,1.8,0.095,25.0,99.0,0.995,3.35,0.54,10.1,5,153
7.1,0.43,0.42,5.5,0.071,28.0,128.0,0.9973,3.42,0.71,10.5,5,155
7.1,0.43,0.42,5.5,0.07,29.0,129.0,0.9973,3.42,0.72,10.5,5,156
7.1,0.43,0.42,5.5,0.071,28.0,128.0,0.9973,3.42,0.71,10.5,5,157

|

||||

7.1,0.68,0.0,2.2,0.073,12.0,22.0,0.9969,3.48,0.5,9.3,5,158

|

||||

6.8,0.6,0.18,1.9,0.079,18.0,86.0,0.9968,3.59,0.57,9.3,6,159

|

||||

7.6,0.95,0.03,2.0,0.09,7.0,20.0,0.9959,3.2,0.56,9.6,5,160

|

||||

7.6,0.68,0.02,1.3,0.07200000000000001,9.0,20.0,0.9965,3.17,1.08,9.2,4,161

|

||||

7.8,0.53,0.04,1.7,0.076,17.0,31.0,0.9964,3.33,0.56,10.0,6,162

|

||||

7.4,0.6,0.26,7.3,0.07,36.0,121.0,0.9982,3.37,0.49,9.4,5,163

|

||||

7.3,0.59,0.26,7.2,0.07,35.0,121.0,0.9981,3.37,0.49,9.4,5,164

|

||||

7.8,0.63,0.48,1.7,0.1,14.0,96.0,0.9961,3.19,0.62,9.5,5,165

|

||||

6.8,0.64,0.1,2.1,0.085,18.0,101.0,0.9956,3.34,0.52,10.2,5,166

|

||||

7.3,0.55,0.03,1.6,0.07200000000000001,17.0,42.0,0.9956,3.37,0.48,9.0,4,167

|

||||

6.8,0.63,0.07,2.1,0.08900000000000001,11.0,44.0,0.9953,3.47,0.55,10.4,6,168

|

||||

7.9,0.885,0.03,1.8,0.057999999999999996,4.0,8.0,0.9972,3.36,0.33,9.1,4,170

|

||||

8.0,0.42,0.17,2.0,0.073,6.0,18.0,0.9972,3.29,0.61,9.2,6,172

|

||||

7.4,0.62,0.05,1.9,0.068,24.0,42.0,0.9961,3.42,0.57,11.5,6,173

|

||||

6.9,0.5,0.04,1.5,0.085,19.0,49.0,0.9958,3.35,0.78,9.5,5,175

|

||||

7.3,0.38,0.21,2.0,0.08,7.0,35.0,0.9961,3.33,0.47,9.5,5,176

|

||||

7.5,0.52,0.42,2.3,0.087,8.0,38.0,0.9972,3.58,0.61,10.5,6,177

|

||||

7.0,0.805,0.0,2.5,0.068,7.0,20.0,0.9969,3.48,0.56,9.6,5,178

|

||||

8.8,0.61,0.14,2.4,0.067,10.0,42.0,0.9969,3.19,0.59,9.5,5,179

|

||||

8.8,0.61,0.14,2.4,0.067,10.0,42.0,0.9969,3.19,0.59,9.5,5,180

|

||||

8.9,0.61,0.49,2.0,0.27,23.0,110.0,0.9972,3.12,1.02,9.3,5,181

|

||||

7.2,0.73,0.02,2.5,0.076,16.0,42.0,0.9972,3.44,0.52,9.3,5,182

|

||||

6.8,0.61,0.2,1.8,0.077,11.0,65.0,0.9971,3.54,0.58,9.3,5,183

|

||||

6.7,0.62,0.21,1.9,0.079,8.0,62.0,0.997,3.52,0.58,9.3,6,184

|

||||

8.9,0.31,0.57,2.0,0.111,26.0,85.0,0.9971,3.26,0.53,9.7,5,185

|

||||

7.4,0.39,0.48,2.0,0.08199999999999999,14.0,67.0,0.9972,3.34,0.55,9.2,5,186

|

||||

7.9,0.5,0.33,2.0,0.084,15.0,143.0,0.9968,3.2,0.55,9.5,5,188

|

||||

8.2,0.5,0.35,2.9,0.077,21.0,127.0,0.9976,3.23,0.62,9.4,5,190

|

||||

6.4,0.37,0.25,1.9,0.07400000000000001,21.0,49.0,0.9974,3.57,0.62,9.8,6,191

|

||||

7.6,0.55,0.21,2.2,0.071,7.0,28.0,0.9964,3.28,0.55,9.7,5,193

|

||||

7.6,0.55,0.21,2.2,0.071,7.0,28.0,0.9964,3.28,0.55,9.7,5,194

|

||||

7.3,0.58,0.3,2.4,0.07400000000000001,15.0,55.0,0.9968,3.46,0.59,10.2,5,196

|

||||

11.5,0.3,0.6,2.0,0.067,12.0,27.0,0.9981,3.11,0.97,10.1,6,197

|

||||

6.9,1.09,0.06,2.1,0.061,12.0,31.0,0.9948,3.51,0.43,11.4,4,199

|

||||

9.6,0.32,0.47,1.4,0.055999999999999994,9.0,24.0,0.99695,3.22,0.82,10.3,7,200

|

||||

7.0,0.43,0.36,1.6,0.08900000000000001,14.0,37.0,0.99615,3.34,0.56,9.2,6,204

|

||||

12.8,0.3,0.74,2.6,0.095,9.0,28.0,0.9994,3.2,0.77,10.8,7,205

|

||||

12.8,0.3,0.74,2.6,0.095,9.0,28.0,0.9994,3.2,0.77,10.8,7,206

|

||||

7.8,0.44,0.28,2.7,0.1,18.0,95.0,0.9966,3.22,0.67,9.4,5,208

|

||||

9.7,0.53,0.6,2.0,0.039,5.0,19.0,0.99585,3.3,0.86,12.4,6,210

|

||||

8.0,0.725,0.24,2.8,0.083,10.0,62.0,0.99685,3.35,0.56,10.0,6,211

|

||||

8.2,0.57,0.26,2.2,0.06,28.0,65.0,0.9959,3.3,0.43,10.1,5,213

|

||||

7.8,0.735,0.08,2.4,0.092,10.0,41.0,0.9974,3.24,0.71,9.8,6,214

|

||||

7.0,0.49,0.49,5.6,0.06,26.0,121.0,0.9974,3.34,0.76,10.5,5,215

|

||||

8.7,0.625,0.16,2.0,0.10099999999999999,13.0,49.0,0.9962,3.14,0.57,11.0,5,216

|

||||

8.1,0.725,0.22,2.2,0.07200000000000001,11.0,41.0,0.9967,3.36,0.55,9.1,5,217

|

||||

7.5,0.49,0.19,1.9,0.076,10.0,44.0,0.9957,3.39,0.54,9.7,5,218

|

||||

7.8,0.34,0.37,2.0,0.08199999999999999,24.0,58.0,0.9964,3.34,0.59,9.4,6,220

|

||||

7.4,0.53,0.26,2.0,0.10099999999999999,16.0,72.0,0.9957,3.15,0.57,9.4,5,221

|

||||

|

@@ -0,0 +1,292 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "63356928",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Initial Note\n",

|

||||

"After running experiments in Colab using open-source models from Hugging Face, I decided to do the exercise with OpenAI. The reason is that Llama 3.2 frequently did not follow the prompts correctly, leading to inconsistencies and poor performance. Additionally, using larger models significantly increased processing time, making them less practical for this task.\n",

|

||||

"\n",

|

||||

"The code from this notebook will be reorganized into modules for the final demo."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "5c12f081",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Module to generate synthetic data"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "2389d798",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"\n",

|

||||

"import re \n",

|

||||

"\n",

|

||||

"def _clean_json_output(raw_text: str) -> str:\n",

|

||||

" \"\"\"\n",

|

||||

" Clean the OpenAI output so it can be parsed as valid JSON:\n",

|

||||

" - Leaves the quotes around keys untouched.\n",

|

||||

" - Escapes only double quotes inside value strings.\n",

|

||||

" - Escapes \\n, \\r, \\t.\n",

|

||||

" - Removes code fences and HTML tags.\n",

|

||||

" - Ensures the array starts with [ and ends with ].\n",

|

||||

" - Removes trailing commas.\n",

|

||||

" \"\"\"\n",

|

||||

" text = raw_text.strip()\n",

|

||||

" \n",

|

||||

" # Remove code fences and HTML tags\n",

|

||||

" text = re.sub(r\"```(?:json)?\", \"\", text)\n",

|

||||

" text = re.sub(r\"</?[^>]+>\", \"\", text)\n",

|

||||

" \n",

|

||||

" # Escape double quotes inside Comment values\n",

|

||||

" def escape_quotes_in_values(match):\n",

|

||||

" value = match.group(1)\n",

|

||||

" value = value.replace('\"', r'\\\"') # only inside the value\n",

|

||||

" value = value.replace('\\n', r'\\n').replace('\\r', r'\\r').replace('\\t', r'\\t')\n",

|

||||

" return f'\"{value}\"'\n",

|

||||

" \n",

|

||||

" text = re.sub(r'\"(.*?)\"', escape_quotes_in_values, text)\n",

|

||||

" \n",

|

||||

" # Ensure the text starts with [ and ends with ]\n",

|

||||

" if not text.startswith('['):\n",

|

||||

" text = '[' + text\n",

|

||||

" if not text.endswith(']'):\n",

|

||||

" text += ']'\n",

|

||||

" \n",

|

||||

" # Remove trailing commas before closing brackets\n",

|

||||

" text = re.sub(r',\\s*]', ']', text)\n",

|

||||

" \n",

|

||||

" return text\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "75bfad6f",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import pandas as pd\n",

|

||||

"import json\n",

|

||||

"import openai\n",

|

||||

"import tempfile\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"def generate_synthetic_data_openai(\n",

|

||||

" system_prompt: str,\n",

|

||||

" user_prompt: str,\n",

|

||||

" reference_file=None,\n",

|

||||

" openai_model=\"gpt-4o-mini\",\n",

|

||||

" max_tokens=2048,\n",

|

||||

" temperature=0.0\n",

|

||||

"):\n",

|

||||

" \"\"\"\n",

|

||||

" Generate synthetic data and return the DataFrame and the path to a temporary CSV file.\n",

|

||||

" \"\"\"\n",

|

||||

" # Build the full prompt\n",

|

||||

" if reference_file:\n",

|

||||

" if isinstance(reference_file, str):\n",

|

||||

" df_ref = pd.read_csv(reference_file)\n",

|

||||

" else:\n",

|

||||

" df_ref = pd.read_csv(getattr(reference_file, \"name\", reference_file)) # uploaded file object: read from its temp path\n",

|

||||

" reference_data = df_ref.to_dict(orient=\"records\")\n",

|

||||

" user_prompt_full = (\n",

|

||||

" f\"{user_prompt}\\nFollow the structure and distribution of the reference data, \"\n",

|

||||

" f\"but do NOT copy any exact values:\\n{reference_data}\"\n",

|

||||

" )\n",

|

||||

" else:\n",

|

||||

" user_prompt_full = user_prompt\n",

|

||||

"\n",

|

||||

" # Call OpenAI\n",

|

||||

" response = openai.chat.completions.create(\n",

|

||||

" model=openai_model,\n",

|

||||

" messages=[\n",

|

||||

" {\"role\": \"system\", \"content\": system_prompt},\n",

|

||||

" {\"role\": \"user\", \"content\": user_prompt_full},\n",

|

||||

" ],\n",

|

||||

" temperature=temperature,\n",

|

||||

" max_tokens=max_tokens,\n",

|

||||

" )\n",

|

||||

"\n",

|

||||

" raw_text = response.choices[0].message.content\n",

|

||||

" cleaned_json = _clean_json_output(raw_text)\n",

|

||||

"\n",

|

||||

" # Parse JSON\n",

|

||||

" try:\n",

|

||||

" data = json.loads(cleaned_json)\n",

|

||||

" except json.JSONDecodeError as e:\n",

|

||||

" raise ValueError(f\"Generated JSON is invalid. Error: {e}\\nTruncated output: {cleaned_json[:500]}\") from e\n",

|

||||

"\n",

|

||||

" df = pd.DataFrame(data)\n",

|

||||

"\n",

|

||||

" # Save a temporary CSV file\n",

|

||||

" tmp_file = tempfile.NamedTemporaryFile(delete=False, suffix=\".csv\")\n",

|

||||

" df.to_csv(tmp_file.name, index=False)\n",

|

||||

" tmp_file.close()\n",

|

||||

"\n",

|

||||

" return df, tmp_file.name\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "91af1eb5",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Default prompts"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "792d1555",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"SYSTEM_PROMPT = \"\"\"\n",

|

||||

"You are a precise synthetic data generator. Your only task is to output valid JSON arrays of dictionaries.\n",

|

||||

"\n",

|

||||

"Rules:\n",

|

||||

"1. Output a single JSON array starting with '[' and ending with ']'.\n",

|

||||

"2. Do not include markdown, code fences, or explanatory text — only the JSON.\n",

|

||||

"3. Keep all columns exactly as specified; do not add or remove fields (index must be omitted).\n",

|

||||

"4. Respect data types: text, number, date, boolean, etc.\n",

|

||||

"5. Ensure internal consistency and realistic variation.\n",

|

||||

"6. If a reference table is provided, generate data with similar statistical distributions for numerical and categorical variables, \n",

|

||||

" but never copy exact rows. Each row must be independent and new.\n",

|

||||

"7. For personal information (names, ages, addresses, IDs), ensure diversity and realism — individual values may be reused to maintain realism, \n",

|

||||

" but never reuse or slightly modify entire reference rows.\n",

|

||||

"8. Escape all internal double quotes in strings with a backslash (\\\").\n",

|

||||

"9. Replace any single quotes in strings with double quotes.\n",

|

||||

"10. Escape newline (\\n), tab (\\t), or carriage return (\\r) characters as \\\\n, \\\\t, \\\\r inside strings.\n",

|

||||

"11. Remove any trailing commas before closing brackets.\n",

|

||||

"12. Do not include any reference data or notes about it in the output.\n",

|

||||

"13. The output must always be valid JSON parseable by standard JSON parsers.\n",

|

||||

"\"\"\"\n",

|

||||

"\n",

|

||||

"USER_PROMPT = \"\"\"\n",

|

||||

"Generate exactly 15 rows of synthetic data following all the rules above. \n",

|

||||

"Ensure that all strings are safe for JSON parsing and ready to convert to a pandas DataFrame.\n",

|

||||

"\"\"\"\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "6f9331fa",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Test"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "d38f0afb",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"To test the generator, we use the first 50 examples of the Reddit gaming comments with sentiments dataset.\n",

|

||||

"Source: https://www.kaggle.com/datasets/sainitishmitta04/23k-reddit-gaming-comments-with-sentiments-dataset"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "78d94faa",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"\n",

|

||||

"df, _ = generate_synthetic_data_openai(SYSTEM_PROMPT, USER_PROMPT, reference_file=\"data/sentiment_reference.csv\")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "0e6b5ebb",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"df"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "015a3110",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"print(df.Comment[0])"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"id": "0ef44876",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Gradio Demo"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"id": "aa4092f4",

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import gradio as gr\n",

|

||||

"\n",

|

||||

"with gr.Blocks() as demo:\n",

|

||||

" gr.Markdown(\"# 🧠 Synthetic Data Generator\")\n",

|

||||

"\n",

|

||||

" with gr.Row():\n",

|

||||

" system_prompt_input = gr.Textbox(label=\"System Prompt\", value=SYSTEM_PROMPT, lines=10)\n",

|

||||

"\n",

|

||||

" with gr.Row():\n",

|

||||

" user_prompt_input = gr.Textbox(label=\"User Prompt\", value=USER_PROMPT, lines=5)\n",

|

||||

"\n",

|

||||

" with gr.Row():\n",

|

||||

" reference_input = gr.File(label=\"Reference CSV (optional)\", file_types=[\".csv\"])\n",

|

||||

"\n",

|

||||

" output_df = gr.DataFrame(label=\"Generated Data\")\n",

|

||||

" download_csv = gr.File(label=\"Download CSV\")\n",

|

||||

"\n",

|

||||

" generate_btn = gr.Button(\"🚀 Generate Data\")\n",

|

||||

"\n",

|

||||

" generate_btn.click(\n",

|

||||

" fn=generate_synthetic_data_openai,\n",

|

||||

" inputs=[system_prompt_input, user_prompt_input, reference_input],\n",

|

||||

" outputs=[output_df, download_csv]\n",

|

||||

" )\n",

|

||||

"\n",

|

||||

"demo.launch(debug=True)\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"kernelspec": {

|

||||

"display_name": ".venv",

|

||||

"language": "python",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"codemirror_mode": {

|

||||

"name": "ipython",

|

||||

"version": 3

|

||||

},

|

||||

"file_extension": ".py",

|

||||

"mimetype": "text/x-python",

|

||||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.12.12"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 5

|

||||

}
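In the notebook's `_clean_json_output`, the non-greedy pattern `"(.*?)"` stops at the next double quote, so the captured value can never contain one and the quote-escaping step has no effect; `.` also does not match newlines, so raw line breaks are not escaped either. A more conservative alternative, sketched here under the assumption that the model returns a single top-level JSON array, is to strip code fences, slice from the first `[` to the last `]`, and let `json.loads(..., strict=False)` accept raw control characters inside strings. The function name `extract_json_array` is hypothetical.

```python
import json
import re


def extract_json_array(raw_text: str) -> list:
    """Hypothetical helper: pull a JSON array out of raw model output."""
    # Drop Markdown code fences such as ```json ... ```
    text = re.sub(r"```(?:json)?", "", raw_text).strip()

    # Keep only the outermost array: first '[' to last ']'
    start, end = text.find("["), text.rfind("]")
    if start == -1 or end == -1 or end < start:
        raise ValueError("No JSON array found in model output")
    text = text[start : end + 1]

    # Remove trailing commas before a closing bracket or brace
    # (caveat: this would also rewrite a literal ", ]" inside a string value)
    text = re.sub(r",\s*([\]}])", r"\1", text)

    # strict=False lets raw control characters (e.g. newlines) appear inside strings
    data = json.loads(text, strict=False)
    if not isinstance(data, list):
        raise ValueError("Top-level JSON value is not an array")
    return data


raw = 'Here you go:\n```json\n[{"Comment": "Great game,\nloved it", "sentiment": "positive"},]\n```'
rows = extract_json_array(raw)
print(rows)  # [{'Comment': 'Great game,\nloved it', 'sentiment': 'positive'}]
```

Slicing to the outer brackets avoids blindly prepending `[` or appending `]`, which can turn a response with leading prose into invalid JSON.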

|

||||

@@ -0,0 +1,16 @@

|

||||

[project]

|

||||

name = "synthetic-data"

|

||||

version = "0.1.0"

|

||||

description = "An intelligent synthetic data generator using OpenAI models"

|

||||

authors = [

|

||||

{ name = "Sebastian Rodriguez" }

|

||||

]

|

||||

dependencies = [

|

||||

"gradio>=5.49.1",

|

||||

"openai>=2.6.0",

|

||||

"pandas>=2.3.3",

|

||||

"python-dotenv>=1.0.0",

|

||||

"numpy>=1.24.0",

|

||||

"matplotlib>=3.7.0",

|

||||

"seaborn>=0.13.0"

|

||||

]

|

||||

@@ -0,0 +1,10 @@

|

||||

# Core dependencies

|

||||

gradio>=5.49.1

|

||||

openai>=2.6.0

|

||||

pandas>=2.3.3

|

||||

python-dotenv>=1.0.0

|

||||

|

||||

# Evaluation dependencies

|

||||

numpy>=1.24.0

|

||||

matplotlib>=3.7.0

|

||||

seaborn>=0.13.0

|

||||

@@ -0,0 +1,13 @@

|

||||

import os

|

||||

import glob

|

||||

|

||||

def cleanup_temp_files(temp_dir: str):

|

||||

"""

|

||||

Remove all temporary files from the given directory.

|

||||

"""

|

||||

files = glob.glob(os.path.join(temp_dir, "*"))

|

||||

for f in files:

|

||||

try:

|

||||

os.remove(f)

|

||||

except Exception as e:

|

||||

print(f"[Warning] Could not delete {f}: {e}")
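`cleanup_temp_files` globs every entry in the directory, so any subdirectory reaches `os.remove`, fails, and is reported as a warning. A minimal variant, assuming only regular files should be deleted, filters with `os.path.isfile` and returns how many files were removed. The name `cleanup_temp_files_only` is hypothetical.

```python
import glob
import os
import tempfile


def cleanup_temp_files_only(temp_dir: str) -> int:
    """Delete regular files directly inside temp_dir; leave subdirectories alone."""
    removed = 0
    for path in glob.glob(os.path.join(temp_dir, "*")):
        if not os.path.isfile(path):
            continue  # skip subdirectories and anything that is not a regular file
        try:
            os.remove(path)
            removed += 1
        except OSError as e:
            print(f"[Warning] Could not delete {path}: {e}")
    return removed


with tempfile.TemporaryDirectory() as d:
    for name in ("plot_1.png", "synthetic_data.csv"):
        open(os.path.join(d, name), "w").close()
    os.mkdir(os.path.join(d, "nested"))
    print(cleanup_temp_files_only(d))  # 2
    print(sorted(os.listdir(d)))       # ['nested']
```

Like the original, `glob` with `"*"` does not match hidden dotfiles.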

|

||||

@@ -0,0 +1,45 @@

|

||||

# -------------------Setup Constants -------------------

|

||||

N_REFERENCE_ROWS = 64 # Max reference rows per batch for sampling

|

||||

MAX_TOKENS_MODEL = 128_000 # Max tokens supported by the model, used for batching computations

|

||||

PROJECT_TEMP_DIR = "temp_plots"

|

||||

|

||||

|

||||

|

||||

#----------------- Prompts-------------------------------

|

||||

SYSTEM_PROMPT = """

|

||||

You are a precise synthetic data generator. Your only task is to output valid JSON arrays of dictionaries.

|

||||

|

||||

Rules:

|

||||

1. Output a single JSON array starting with '[' and ending with ']'.

|

||||

2. Do not include markdown, code fences, or explanatory text — only the JSON.

|

||||

3. Keep all columns exactly as specified; do not add or remove fields (index must be omitted).

|

||||

4. Respect data types: text, number, date, boolean, etc.

|

||||

5. Ensure internal consistency and realistic variation.

|

||||

6. If a reference table is provided, generate data with similar statistical distributions for numerical and categorical variables,

|

||||

but never copy exact rows. Each row must be independent and new.

|

||||

7. For personal information (names, ages, addresses, IDs), ensure diversity and realism — individual values may be reused to maintain realism,

|

||||

but never reuse or slightly modify entire reference rows.

|

||||

8. Escape internal double quotes in strings with a backslash (\\") for JSON validity.

|

||||

9. Do NOT replace single quotes in normal text; they should remain as-is.

|

||||

10. Escape newline, tab, and carriage-return characters inside strings as \\n, \\t, and \\r.

|

||||

11. Remove any trailing commas before closing brackets.

|

||||

12. Do not include any reference data or notes about it in the output.

|

||||

13. The output must always be valid JSON parseable by standard JSON parsers.

|

||||

14. Do not repeat any exact row, either from the reference data or from previously generated data.

|

||||

15. When using reference data, consider the entire dataset for statistical patterns and diversity;

|

||||

do not restrict generation to the first rows or the order of the dataset.

|

||||

16. Introduce slight random variations in numerical values, and choose categorical values randomly according to the distribution,

|

||||

without repeating rows.

|

||||

|

||||

"""

|

||||

|

||||

USER_PROMPT = """

|

||||

Generate exactly 15 rows of synthetic data following all the rules above.

|

||||

Ensure that all strings are safe for JSON parsing and ready to convert to a pandas DataFrame.

|

||||

"""
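Rules 8 and 10 ask the model to emit exactly the escapes a standard JSON encoder produces. As a quick check of what a compliant row looks like, `json.dumps` can serialize a value containing a double quote and a newline, and `json.loads` restores it. The sample comment text is made up for illustration. The last lines show why the prompt source needs doubled backslashes: in a normal (non-raw) Python string, `"\n"` is a real newline.

```python
import json

# A made-up row whose text value contains a double quote and a newline
row = {"Comment": 'He said "GG"\nthen left', "sentiment": "neutral"}

encoded = json.dumps([row])
print(encoded)
# [{"Comment": "He said \"GG\"\nthen left", "sentiment": "neutral"}]

# The escaped form round-trips back to the original value
print(json.loads(encoded) == [row])  # True

# To *show* the model a backslash-n, the prompt source must write "\\n"
rule = "Escape newlines as \\n inside strings."
print(rule)  # Escape newlines as \n inside strings.
```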

|

||||

|

||||

|

||||

@@ -0,0 +1,108 @@

|

||||

import os

|

||||

from typing import List

|

||||

|

||||

import pandas as pd

|

||||

from PIL import Image

|

||||

|

||||

from src.constants import MAX_TOKENS_MODEL, N_REFERENCE_ROWS

|

||||

from src.evaluator import SimpleEvaluator

|

||||

from src.helpers import hash_row, sample_reference

|

||||

from src.openai_utils import detect_total_rows_from_prompt, generate_batch

|

||||

|

||||

|

||||

# ------------------- Main Function -------------------

|

||||

def generate_and_evaluate_data(

|

||||

system_prompt: str,

|

||||

user_prompt: str,

|

||||

temp_dir: str,

|

||||

reference_file=None,

|

||||

openai_model: str = "gpt-4o-mini",

|

||||

max_tokens_model: int = MAX_TOKENS_MODEL,

|

||||

n_reference_rows: int = N_REFERENCE_ROWS,

|

||||

):

|

||||

"""

|

||||

Generate synthetic data in batches, evaluate against reference data, and save results.

|

||||

Uses dynamic batching and reference sampling to optimize cost and token usage.

|

||||

"""

|

||||

os.makedirs(temp_dir, exist_ok=True)

|

||||

reference_df = pd.read_csv(reference_file) if reference_file else None

|

||||

total_rows = detect_total_rows_from_prompt(user_prompt, openai_model)

|

||||

|

||||

final_df = pd.DataFrame()

|

||||

existing_hashes = set()

|

||||

rows_left = total_rows

|

||||

iteration = 0

|

||||

|

||||

print(f"[Info] Total rows requested: {total_rows}")

|

||||

|

||||

# Estimate tokens for the prompt by adding system, user and sample (used once per batch)

|

||||

prompt_sample = f"{system_prompt} {user_prompt} {sample_reference(reference_df, n_reference_rows)}"

|

||||

prompt_tokens = max(1, len(prompt_sample) // 4)

|

||||

|

||||

# Estimate tokens per row dynamically using a sample

|

||||

example_sample = sample_reference(reference_df, n_reference_rows)

|

||||

if example_sample is not None and len(example_sample) > 0:

|

||||

sample_text = str(example_sample)

|

||||

tokens_per_row = max(1, len(sample_text) // len(example_sample) // 4)

|

||||

else:

|

||||

tokens_per_row = 30 # fallback if no reference

|

||||

|

||||

print(f"[Info] Tokens per row estimate: {tokens_per_row}, Prompt tokens: {prompt_tokens}")

|

||||

|

||||

# ---------------- Batch Generation Loop ----------------

|

||||

while rows_left > 0:

|

||||

iteration += 1

|

||||

batch_sample = sample_reference(reference_df, n_reference_rows)

|

||||

batch_size = min(rows_left, max(1, (max_tokens_model - prompt_tokens) // tokens_per_row))

|

||||

print(f"[Batch {iteration}] Batch size: {batch_size}, Rows left: {rows_left}")

|

||||

|

||||

try:

|

||||

df_batch = generate_batch(

|

||||

system_prompt, user_prompt, batch_sample, batch_size, openai_model

|

||||

)

|

||||

except Exception as e:

|

||||

print(f"[Error] Batch {iteration} failed: {e}")

|

||||

break

|

||||

|

||||

# Filter duplicates using hash

|

||||

new_rows = []
for _, row in df_batch.iterrows():
    row_hash = hash_row(row)
    if row_hash not in existing_hashes:
        existing_hashes.add(row_hash)  # register now so repeats within this batch are skipped too
        new_rows.append(row)

|

||||

|

||||

final_df = pd.concat([final_df, pd.DataFrame(new_rows)], ignore_index=True)

|

||||

rows_left = total_rows - len(final_df)

|

||||

print(

|

||||

f"[Batch {iteration}] Unique new rows added: {len(new_rows)}, Total so far: {len(final_df)}"

|

||||

)

|

||||

|

||||

if len(new_rows) == 0:

|

||||

print("[Warning] No new unique rows. Stopping batches.")

|

||||

break

|

||||

|

||||

# ---------------- Evaluation ----------------

|

||||

report_df, vis_dict = pd.DataFrame(), {}

|

||||

if reference_df is not None and not final_df.empty:

|

||||

evaluator = SimpleEvaluator(temp_dir=temp_dir)

|

||||

evaluator.evaluate(reference_df, final_df)

|

||||

report_df = evaluator.results_as_dataframe()

|

||||

vis_dict = evaluator.create_visualizations_temp_dict(reference_df, final_df)

|

||||

print(f"[Info] Evaluation complete. Report shape: {report_df.shape}")

|

||||

|

||||

# ---------------- Collect Images ----------------

|

||||

all_images: List[Image.Image] = []

|

||||

for imgs in vis_dict.values():

|

||||

if isinstance(imgs, list):

|

||||

all_images.extend([img for img in imgs if img is not None])

|

||||

|

||||

# ---------------- Save CSV ----------------

|

||||

final_csv_path = os.path.join(temp_dir, "synthetic_data.csv")

|

||||

final_df.to_csv(final_csv_path, index=False)

|

||||

print(f"[Done] Generated {len(final_df)} rows → saved to {final_csv_path}")

|

||||

|

||||

generated_state = {}

|

||||

|

||||

return final_df, final_csv_path, report_df, generated_state, all_images
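`generate_and_evaluate_data` sizes each batch with a rough 4-characters-per-token heuristic and filters duplicates by hashing rows. The sketch below isolates both steps so they can be checked without an API call. `hash_row` lives in `src.helpers`, which is not shown here, so the version below is a hypothetical stand-in that hashes a row's values; `filter_new_rows` is likewise a hypothetical name. Note that the batch size is derived from `MAX_TOKENS_MODEL`, the context size, while the number of tokens a model may return in one response is usually capped much lower, so large computed batches may be truncated; check the model documentation before relying on them.

```python
import hashlib
from typing import List, Set

import pandas as pd


def estimate_tokens(text: str) -> int:
    # Rough heuristic used by the generator: about 4 characters per token
    return max(1, len(text) // 4)


def batch_size_for(rows_left: int, max_tokens_model: int, prompt_tokens: int, tokens_per_row: int) -> int:
    # Same formula as the generator: fill the remaining budget, at least 1 row, at most rows_left
    return min(rows_left, max(1, (max_tokens_model - prompt_tokens) // tokens_per_row))


def hash_row(row: pd.Series) -> str:
    # Hypothetical stand-in for src.helpers.hash_row: hash the row's values as text
    return hashlib.md5("|".join(map(str, row.tolist())).encode("utf-8")).hexdigest()


def filter_new_rows(df_batch: pd.DataFrame, existing_hashes: Set[str]) -> List[pd.Series]:
    new_rows = []
    for _, row in df_batch.iterrows():
        h = hash_row(row)
        if h not in existing_hashes:
            existing_hashes.add(h)  # register immediately so in-batch repeats are skipped
            new_rows.append(row)
    return new_rows


print(estimate_tokens("x" * 400))                   # 100
print(batch_size_for(500, 128_000, 8_000, 40))      # 500
print(batch_size_for(500, 16_000, 8_000, 40))       # 200

batch = pd.DataFrame({"quality": [5, 6, 5], "alcohol": [9.4, 9.8, 9.4]})
seen: Set[str] = set()
print(len(filter_new_rows(batch, seen)))  # 2 (the third row repeats the first)
```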

|

||||

@@ -0,0 +1,142 @@

|

||||

import seaborn as sns

|

||||

import matplotlib.pyplot as plt

|

||||

from typing import List, Dict, Any, Optional

|

||||

from PIL import Image

|

||||

import pandas as pd

|

||||

import os

|

||||

|

||||

class SimpleEvaluator:

|

||||

"""

|

||||

Evaluates synthetic data against a reference dataset, providing summary statistics and visualizations.

|

||||

"""

|

||||

|

||||

def __init__(self, temp_dir: str = "temp_plots"):

|

||||

"""

|

||||

Initialize the evaluator.

|

||||

|

||||

Args:

|

||||

temp_dir (str): Directory to save temporary plot images.

|

||||

"""

|

||||

self.temp_dir = temp_dir

|

||||

os.makedirs(self.temp_dir, exist_ok=True)

|

||||

|

||||

def evaluate(self, reference_df: pd.DataFrame, generated_df: pd.DataFrame) -> Dict[str, Any]:

|

||||

"""

|

||||

Compare numerical and categorical columns between reference and generated datasets.

|

||||

"""

|

||||

self.results: Dict[str, Any] = {}

|

||||

self.common_cols = [c for c in reference_df.columns if c in generated_df.columns]  # keep reference column order (deterministic)

|

||||

|

||||

for col in self.common_cols:

|

||||

if pd.api.types.is_numeric_dtype(reference_df[col]):

|

||||

ref_vals = reference_df[col]
gen_vals = pd.to_numeric(generated_df[col], errors="coerce")  # numbers returned as strings still compare; unparseable values become NaN
self.results[col] = {
"type": "numerical",
"ref_mean": ref_vals.mean(),
"gen_mean": gen_vals.mean(),
"mean_diff": gen_vals.mean() - ref_vals.mean(),
"ref_std": ref_vals.std(),
"gen_std": gen_vals.std(),
"std_diff": gen_vals.std() - ref_vals.std(),
}

|

||||

else:

|

||||

ref_counts = reference_df[col].value_counts(normalize=True)

|

||||

gen_counts = generated_df[col].value_counts(normalize=True)

|

||||

overlap = sum(min(ref_counts.get(k, 0), gen_counts.get(k, 0)) for k in ref_counts.index)

|

||||

self.results[col] = {

|

||||

"type": "categorical",

|

||||

"distribution_overlap_pct": round(overlap * 100, 2),

|

||||

"ref_unique": len(ref_counts),

|

||||

"gen_unique": len(gen_counts)

|

||||

}

|

||||

|

||||

return self.results

|

||||

|

||||

def results_as_dataframe(self) -> pd.DataFrame:

|

||||

"""

|

||||

Convert the evaluation results into a pandas DataFrame for display.

|

||||

"""

|

||||

rows = []

|

||||

for col, stats in self.results.items():

|

||||